Joel Woodward, University of Wolverhampton

As reality capture becomes more widely accessible, the use of point clouds for visualisation has become increasingly important within the built environment sector, allowing highly detailed digital models of existing buildings to be generated. With the integration of immersive technologies, such as Virtual and Augmented Reality (VR/AR), the demand for using Extremely Large Point Clouds (ELPCs) in real-time applications is growing rapidly. These expansive datasets make it possible to capture and represent reality with increased detail and precision, allowing designers and clients to visualise buildings and spaces in a more immersive and intuitive way.

However, as point clouds increase in size and complexity, significant challenges emerge in terms of computational performance, file management and hardware requirements. For immersive visualisation applications, maintaining real-time rendering is critical, with a minimum performance threshold of 60 frames per second (fps) required to ensure usability and avoid issues such as motion sickness.
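To make the 60 fps threshold concrete, it can be expressed as a per-frame time budget. The following is a minimal sketch of that arithmetic only; the function name `frame_time_ms` is illustrative and is not part of the study's tooling.

```python
def frame_time_ms(fps: float) -> float:
    """Return the time available to render a single frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


TARGET_FPS = 60  # minimum threshold stated in the text

budget_ms = frame_time_ms(TARGET_FPS)
print(f"{TARGET_FPS} fps -> {budget_ms:.2f} ms per frame")  # 60 fps -> 16.67 ms per frame
```

In other words, all points drawn in a frame must be processed in under roughly 17 ms to meet the threshold.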

The aim of this research was to test the feasibility of ELPCs for real-time visualisation in game engines, specifically Unreal Engine 5. The study benchmarks performance across different point cloud sizes, file formats and hardware configurations to provide practical insight into the current strengths and limitations of the technology.

Point clouds are typically generated using LiDAR scanning or photogrammetry, producing millions of 3D coordinates that capture the geometry and surface detail of the built environment. Due to the non-intrusive nature of reality capture, point clouds are widely used in architecture for heritage recording, surveying, and as-built modelling, and increasingly for immersive visualisation through integration with VR platforms.

The 3D spatial data captured provides a range of benefits, as point clouds allow for extreme detail, accuracy and precision. When rendered in immersive environments, they allow users to interact with true-to-life digital representations of buildings or landscapes. This capability supports better-informed design decision-making and non-intrusive analysis of historic buildings for future record.

Despite the advantages of point cloud applications, they also present notable challenges. ELPCs are resource-intensive, and the size of individual datasets imposes computational constraints on real-time applications. Rendering such volumes of data in real time is demanding even for high-end machines.

Previous research has attempted to address this through semantic segmentation and deep learning neural networks, which classify each point of the point cloud into its respective category, making the datasets more manageable. While valuable, these approaches often fail to address practical implementation in real-time environments, with limited focus on point clouds at architectural scales.

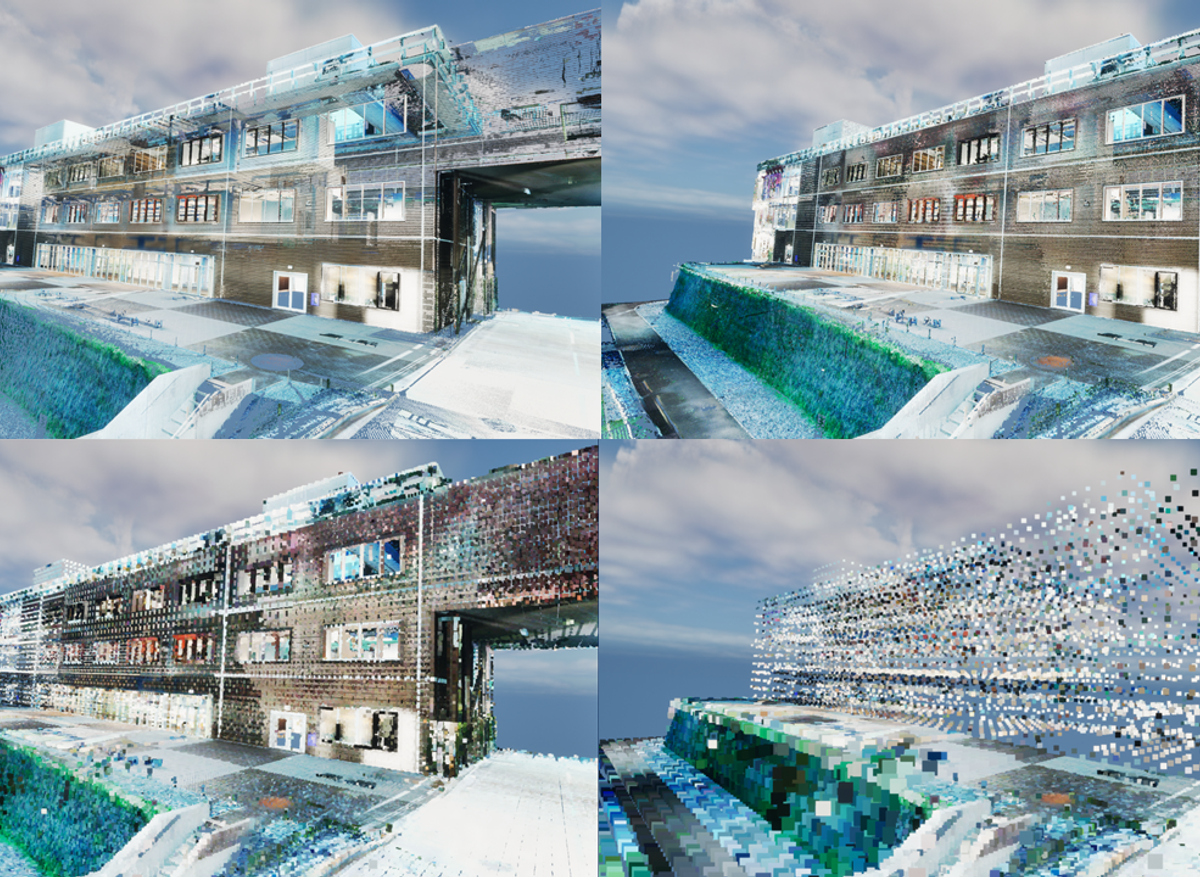

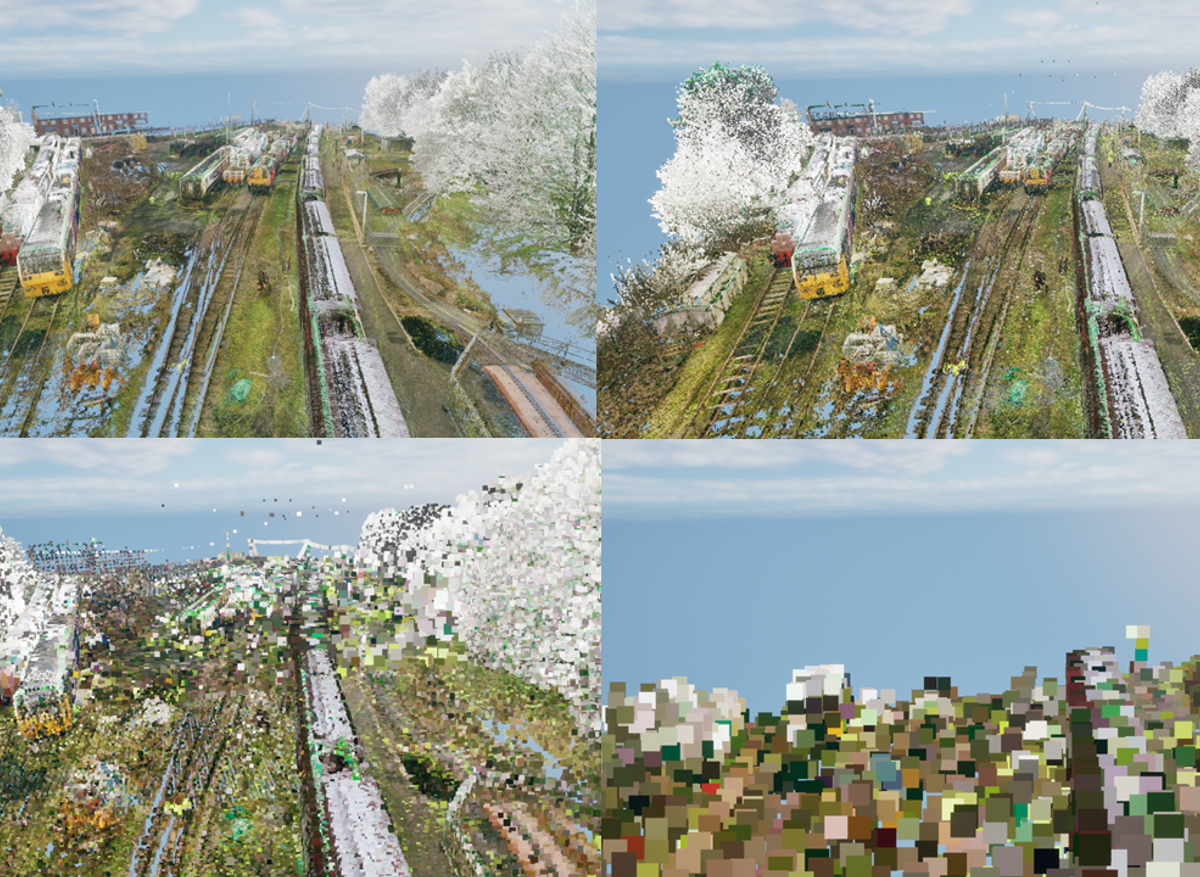

This study focuses on experimentally testing ELPCs directly in a real-time environment, to analyse the feasibility of point clouds for rendering applications on an architectural scale. Using Unreal Engine 5.4.4, the research imported a series of datasets ranging from 3 to 31 GB in both .las and .e57 formats to evaluate the impact of file type. Point budgets were systematically adjusted from 100,000 to 100 million points to identify the performance thresholds, providing practical benchmarks for real-time rendering applications.

To address the challenges of rendering ELPCs in real time, future research should focus on methods for splitting ELPCs into separate chunks. Separating an ELPC into smaller chunks may allow larger point clouds to be imported without reducing the point budget. For example, sample 3 is a point cloud with 543,341,928 points; even when only 18% of the scene is rendered, at a point budget of 100,000,000, the highest frame rate achieved is 15 fps.

Whilst chunking may be applicable to smaller point clouds, it is important to note that larger point clouds may not be suited to it. If this point cloud is rendered at a point budget of 10,000,000 to meet the minimum performance requirement, approximately 54 separate chunks would be required, which is not feasible. When considering separating point clouds into more manageable chunks, .e57 may still be the preferred file format compared to alternatives, as .e57 files are compressed without sacrificing performance. However, utilising deep learning for point cloud optimisation remains the key focus for future research.
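The figures quoted for sample 3 can be reproduced with simple arithmetic. The sketch below is illustrative only: the helper names `visible_fraction` and `chunks_needed` are hypothetical, and it assumes each chunk holds at most one point budget's worth of points. Rounding up to whole chunks gives 55, in line with the approximately 54 quoted above.

```python
import math

TOTAL_POINTS = 543_341_928  # sample 3, as reported in the text


def visible_fraction(total_points: int, point_budget: int) -> float:
    """Fraction of the cloud that fits within the point budget (capped at 1)."""
    return min(1.0, point_budget / total_points)


def chunks_needed(total_points: int, point_budget: int) -> int:
    """Number of chunks if each chunk holds at most `point_budget` points."""
    return math.ceil(total_points / point_budget)


# A 100,000,000 point budget covers roughly 18% of sample 3.
print(f"{visible_fraction(TOTAL_POINTS, 100_000_000):.1%}")  # 18.4%

# A 10,000,000 point budget would require this many chunks.
print(chunks_needed(TOTAL_POINTS, 10_000_000))  # 55
```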